Part 1 - UK AI regulation

This is Part 1 of 'Regulation of AI'.

From early 2023, the messaging from the then Conservative-led UK government was that the UK would adopt a light-touch approach to regulating AI. This was evident in the AI white paper published in March 2023, which outlined a principles-based framework (see The Ethics of AI – the Digital Dilemma for more information about the principles themselves, and see here for additional coverage of the AI white paper). The UK government held a consultation on the AI white paper in 2023 and published its response on 6 February 2024, which adds slightly more flesh to the bones of the UK framework.

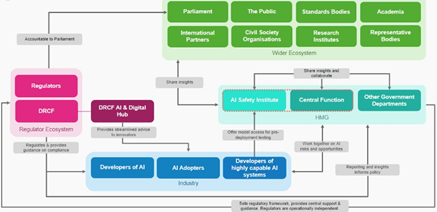

UK AI regulation landscape in Spring 2024 (note 1)

Existing regulators, using context-specific approaches, have been tasked with taking the lead in guiding the development and use of AI in line with the five principles. Such an approach could lead to inconsistent enforcement, or to some larger regulators dominating by interpreting the scope of their remit or role too broadly. The government (via DSIT) therefore aims to provide regulators with central support and monitoring, without appointing a cross-cutting AI regulator. This includes a new steering committee of government representatives and key regulators to support knowledge exchange and coordination on AI governance.

A principles-based, somewhat wait-and-see approach means that, in practice, it is not straightforward for providers and deployers to plan proactively for compliance and to navigate what is potentially an AI minefield. For example, while developers of AI systems may undertake their own safety research, there is no common standard of quality or consistency. To address this, in its AI white paper and in its follow-up Initial Guidance for Regulators, the government gave "phase one" guidance to regulators on implementation considerations. For example, on transparency and explainability, regulators are expected to set explainability requirements and expectations on information sharing by AI lifecycle actors, and to consider the role of available technical standards addressing AI transparency and explainability (such as IEEE 7001, ISO/IEC TS 6254 and ISO/IEC 12792) in clarifying regulatory guidance and supporting the implementation of risk treatment measures. The government has also released guidance on assurance mechanisms and global technical standards, aimed at both industry and regulators, to enable the building and deployment of responsible AI systems. This includes the development of an AI Management System Standard, which is intended to help solve some of the implementation challenges of AI. This standard, ISO/IEC 42001:2023, published in December 2023, specifies requirements for establishing, implementing, maintaining and continually improving an AI management system within organisations. It is designed for entities providing or using AI-based products or services.

The government's assurance guidance sits alongside other support on AI standardisation available from the UK AI Standards Hub; the Portfolio of AI Assurance Techniques (which includes, for example, an interactive AI Audit Bot that allows creators of new and existing AI applications to check their products against existing and proposed regulations in a range of jurisdictions); and the government's Spring 2024 “Introduction to AI assurance” guide, which aims to help businesses and organisations build their understanding of the techniques for developing safe and trustworthy systems.

Phase one guidance also suggested that regulators put information on their AI plans into the public domain, collaborate with each other through cooperation forums, align their guidance and definitions with those of other regulators, issue joint tools or guidance where possible, and map which technical standards could help AI developers and deployers understand the principles in practice, citing them in tools and guidance. The previous government intended to release phase two guidance by summer 2024, but it has not yet arrived.

Further policy, tools and guidance on risk and governance will come from bodies such as the AI Safety Institute (AISI), the AI Policy Directorate (into which the Office for Artificial Intelligence has now been subsumed) and the Responsible Technology Adoption Unit (formerly the Centre for Data Ethics and Innovation). The AISI is evaluating and testing new frontier AI systems (on a voluntary basis), aiming to characterise safety-relevant capabilities, understand the safety and security of systems and assess their societal impacts, while developing its own technical expertise (see the section on the 'Bletchley Declaration' below). If its evaluation of advanced AI systems identifies potentially dangerous capabilities, it will address the risks by engaging with the developer on suitable safety mitigations.

Four key regulators, working under the umbrella of the Digital Regulation Cooperation Forum (DRCF), are leading the way in implementing the framework, for example by issuing best-practice guidance on adhering to the principles: the Information Commissioner's Office (ICO), Ofcom, the Competition and Markets Authority (CMA) and the Financial Conduct Authority (FCA). Within the DRCF, the AI and Digital Hub is running a pilot that advises innovators on AI regulatory compliance, with the aim of helping them navigate potentially conflicting regulations that span the remits of DRCF member regulators. The pilot's initial term ends in April 2025, and it is as yet unclear whether it will be extended.

Early activity from the regulators includes the ICO's first set of AI guidance, published in 2020, which was followed by an AI and Data Protection risk toolkit in 2022. The toolkit aims to help organisations identify and mitigate risks during the AI lifecycle. In March 2023, the ICO published updated guidance on AI and data protection, explaining how to apply the concepts of data protection law when developing or deploying AI, including generative AI. This followed requests from UK industry to clarify requirements for fairness in AI. In January 2024, the ICO launched a four-part consultation series on generative AI and data protection, to which it responded in December 2024. In January 2025, the ICO announced that it will publish a 'single set of rules' for those developing or using AI and will support the government in legislating for those rules to become a statutory Code of Practice on AI. The ICO also runs an Innovation Advice service.

The CMA launched an initial review of foundation models (FMs) in May 2023 to consider rapidly emerging AI markets from a competition and consumer protection perspective. In September 2023, the CMA published an update report setting out its 'guiding principles'. The CMA's overarching principle is accountability: AI developers and deployers should be accountable for the AI outputs provided to consumers. Its further guiding principles relate to access, diversity, choice, fair dealing and transparency. In the second stage of its AI review, the CMA published:

- An AI Foundation Models update paper (11 April 2024)

- An AI Foundation Models technical update report (16 April 2024)

- An AI strategic update (29 April 2024)

- A progress update on its market investigation into the supply of public cloud infrastructure services (28 February 2025)

The Digital Markets, Competition and Consumers Act, in force from 2025, gives the CMA additional tools to identify and address any competition issues in AI markets and other digital markets affected by recent developments in AI.

The FCA also published its strategic update in April 2024, in which it referred to work on AI already undertaken and to its published documents on AI. Jointly with the Bank of England, it has published the AI Discussion Paper (AI DP) (2022), the Feedback Statement (2023), the AI Public-Private Forum Final Report (2022), and the 2019 and 2022 machine learning surveys. It has also published a response to its Call for Input on the data asymmetry between Big Tech and traditional financial services firms. In October 2024, the FCA launched its AI Lab to provide a pathway for the FCA, firms and wider stakeholders to engage on AI-related insights, discussions and case studies. In November 2024, the FCA and the Bank of England published their third survey of AI and machine learning.

Following the passing of the Online Safety Act (OSA) in October 2023, Ofcom, as the online safety regulator, regulates services that are at the forefront of AI adoption. It is in the process of getting the new online safety regime up and running. A key feature of the regime is embedding proactive risk management into an organisation's broader approach to governance and compliance when it integrates generative AI tools that might fall within the scope of the OSA, with user safety recognised and represented at all levels. With a special focus on generative AI, Ofcom's current activity consists largely of research, monitoring, discussion and review.

A number of key regulators (not just the DRCF members) were asked by the government to each publish an update outlining their strategic approach to AI by April 2024, covering:

- an outline of the steps they are taking in line with the expectations set out in the white paper;

- analysis of AI-related risks in the sectors and activities they regulate and the actions they are taking to address these;

- an explanation of their current capability to address AI as compared with their assessment of requirements, and the actions they are taking to ensure they have the right structures and skills in place; and

- a forward look of plans and activities over the coming 12 months.

The updates varied in their breadth and level of detail with some common themes on prospective activities: research into consumer use of AI and cross-sector adoption of generative AI technology by organisations; and a focus on collaboration with the other regulators, the government, standards bodies and international partners.

The Artificial Intelligence (Regulation) Bill, a Private Member's Bill introduced in the House of Lords in November 2023 under the previous government, is also worth a mention. The Bill proposed creating an AI Authority that would collaborate with relevant regulators to construct regulatory sandboxes for AI. It did not survive the Parliamentary wash-up that followed the former prime minister's announcement of the July 2024 UK general election, but it was reintroduced in the House of Lords in March 2025.

In January 2025, the government published its AI Opportunities Action Plan to ramp up AI adoption across the UK. Key initiatives include new AI Growth Zones to build more AI infrastructure, a twentyfold increase in public compute capacity, and a new National Data Library to harness the value in public data and support AI development.

The government is currently preparing an AI Bill targeting the "most advanced AI models", such as ChatGPT. It aims to make legally binding the existing voluntary commitments between companies and the government (such as those agreed at the UK's AI Safety Summit), and to turn the UK’s AI Safety Institute into an arm’s-length government body. The Bill was expected to be published in late 2024 but has been delayed until summer 2025 to allow the government to align with the Trump administration's more extreme pro-innovation stance in the US.

1 Contains public sector information licensed under the Open Government Licence v3.0
